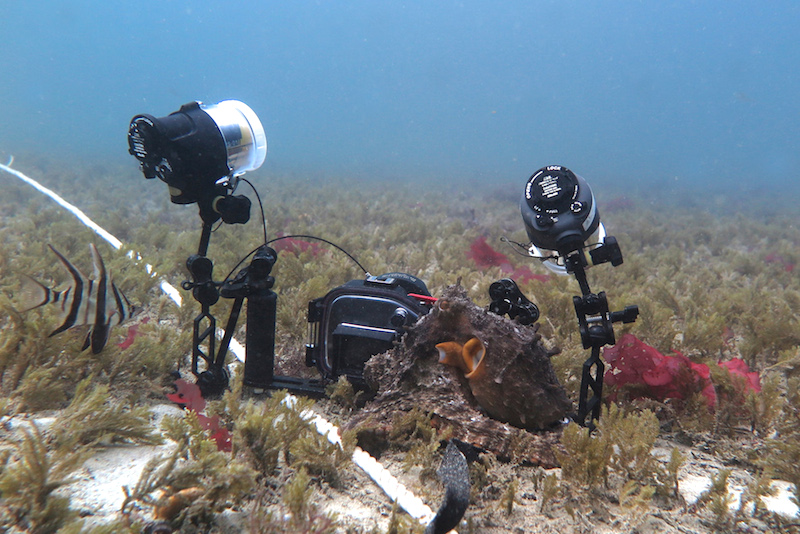

This octopus is inspecting the camera gear of my collaborator David Scheel at Octopolis, off the coast of Australia. Animal encounters machine. Did this feel like something for the octopus? How about for the machine?

“Integrated Information Theory” (IIT) is a theory of the physical basis of consciousness developed by the neuroscientist Giulio Tononi and also endorsed by Christof Koch. Tononi has been at work on it since around 2004. This year there’s a new paper about it in the Philosophical Transactions of the Royal Society, written by both of them.

Their theory has interesting connections to the project of this site: how can we know something about subjective experience in animals with nervous systems smaller and different in structure from our own? IIT is also a relative of panpsychism, which I wrote about in an earlier post. So let’s have a closer look.

Tononi and Koch start with what they take to be some central phenomenological features of consciousness (features we can experience from the first person point of view). They then try to work out what structural properties any physical system has to have to give rise to these features. They hypothesize that the result is a complete story about the basis of consciousness (complete not in all the details, but in solving the main problem). We then learn that consciousness comes in measurable degrees, and the gradient extends down to include quite simple systems, including some (but not all) machines. Consciousness does not require the biochemistry seen in living systems.

The details of their view, and its measure of consciousness, are not too important here. It’s hard to follow how it all works (as others have noted – see this blog post for a discussion I found helpful). “Information” is used in its broad mathematical sense, where it provides measures of uncertainty and its reduction. Any system in which some events are reliably associated with other events can be described in terms of information. A system contains integrated information when its future state depends on what many of the parts were doing a moment ago: we can’t break the system into a group of smaller sub-systems where the changes within these sub-systems are due just to factors internal to each one. Tononi’s “phi” (Φ) is a measure of how resistant a system is to being understood as a mere collection of subsystems in this way.

(Take the octopus and David’s camera. As it happened, there was little going on inside the camera, but suppose the video was running and it was making autofocus adjustments. Octopus and camera might each affect the other, but not that much. There is a complex and integrated system comprising the octopus, a more minimal one in the camera, and not enough interaction for us to treat the octopus-plus-camera as the system instead. You can know most of what the animal will do by just looking at the animal. Things would be different if the camera were wired into the octopus’ nervous system.)
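To make the idea of irreducibility a little more concrete, here is a toy calculation in Python. It is a sketch under strong assumptions and is not Tononi’s Φ, which is defined quite differently and is much harder to compute. The system is a handful of binary nodes with a deterministic update rule, every current state is treated as equally likely, and the “parts” are just the single nodes. The score is how many bits the whole system’s past tells you about its future, minus what each node’s own past tells you about that node’s future. All the names (`mutual_information`, `toy_integration`, `independent`, `coupled`) are hypothetical, chosen for illustration.

```python
from collections import Counter
from itertools import product
from math import log2


def mutual_information(pairs):
    """Mutual information in bits between x and y, given equally likely (x, y) pairs."""
    n = len(pairs)
    pxy = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum(
        (c / n) * log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in pxy.items()
    )


def toy_integration(update, n_nodes):
    """Whole-system predictive information minus the sum over single nodes.

    Assumption: a uniform distribution over current states. This is a crude
    proxy for 'resistance to decomposition', not IIT's actual measure.
    """
    states = list(product((0, 1), repeat=n_nodes))
    transitions = [(s, update(s)) for s in states]
    whole = mutual_information(transitions)
    parts = sum(
        mutual_information([(s[i], t[i]) for s, t in transitions])
        for i in range(n_nodes)
    )
    return whole - parts


def independent(state):
    # Each node just copies its own previous value; nothing crosses between them.
    a, b = state
    return (a, b)


def coupled(state):
    # Each node takes the other's previous value; neither is predictable alone.
    a, b = state
    return (b, a)


print(toy_integration(independent, 2))
print(toy_integration(coupled, 2))
```

In the first system each node’s future is fixed by its own past, so nothing is lost by treating the pair as two separate things and the score is zero. In the second, a node’s own past says nothing about its future; only the pair taken together is predictable, and the score is two bits. The octopus and the camera sit much nearer the first case than the second.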

Any system, with or without a brain, with or without senses, can have high “integrated information” if its workings are irreducible in the relevant sort of way. And integrated information, it is claimed, is all that’s needed for a system to be conscious. Scott Aaronson, in the blog post I cited above, notes that some kinds of pure number-crunching in a computer get a very high score on Tononi’s measure. Some of the language used by Tononi and Koch suggests that something more inherently psychological is required: “an experience is identical to a conceptual structure that is maximally irreducible intrinsically.” But they don’t mean “conceptual” in the usual sense. Any mechanism at all specifies a “concept.”

Why should we believe that any system that’s complex in this way – no matter what job it’s doing, no matter what it’s made of – has some degree of consciousness? Why believe this kind of complexity is all that matters?

Well – why not be bold and start with that simple view? This is often a good approach in science: sketch a simple view, test it, and see if you get led somewhere better. Simpler ideas are often more readily tested (as Karl Popper noted many years ago). When they are wrong, you can learn from their breakdown.

I think that some who support IIT like it for that reason. They want to start with a minimal model, then get to work and refine. But in fact the situation is different. In the approach sketched above, you propose something, test it, and can know when it fails, prompting refinement. Starting simple is good when data can push you towards a better view. In this case, though, there is no such test. If you extract the sheer “integratedness” of the brain’s activity and say that’s all that’s needed, the proposal just sits there, both very surprising and very hard to assess.

Tononi and Koch do not see things like this. IIT, they say, “makes a number of testable predictions.” For example, “A straightforward experimental prediction of IIT is that the loss and recovery of consciousness should be associated with the breakdown and recovery of the brain’s capacity for information integration. This prediction has been confirmed…” A second:

“Another counterintuitive prediction of IIT is that if the efficacy of the 200 million callosal fibres through which the two cerebral hemispheres communicate with each other were reduced progressively, there would be a moment at which… there would be an all-or-none change in consciousness: experience would go from being a single one to suddenly splitting into two separate experiencing minds (one linguistically dominant), as we know to be the case with split-brain patients….”

Here’s a more unusual one:

“One counterintuitive prediction of IIT is that a system such as the cerebral cortex may generate experience even if the majority of its pyramidal neurons are nearly silent, a state that is perhaps approximated through certain meditative practices that aim at reaching ‘naked’ awareness without content.”

However, even accepting all the empirical claims, these cases do not support IIT itself. All these cases have integrated information, and they have the usual biological setting – neurons, a living organism, the senses – as well. So predictions like this, whether they come out right or not, could not support the view that any highly integrated and complex system is conscious. They don’t support the view that integrated information is the only thing that matters.

Tononi and Koch might say: “What else could matter? In those cases where there’s a brain present, it’s integration that makes the difference between conscious and unconscious.”

I’d reply: the “else” is huge here. It includes all the goings-on within cells, learning, the special features of sensory experience, and more. Those things might not matter; it might all come down to something like informational integration. But Tononi and Koch don’t have cases where a prediction about consciousness can be made and then assessed, in a context lacking the biological embedding that I (and many others) think is important.

A more modest version of IIT might accept all this – it might treat IIT as a theory of some important features of brains and the activities within living animals. But the drama around IIT comes from its billing as a full, stand-alone theory of what makes a physical system conscious.

What to do? How can any view about non-neural bases for consciousness be tested? Are we again consigned to idle and unrestrained speculation? I don’t think so. This is a situation where the “gap” between mental and physical will be closed through a multi-pronged approach. On one side, we get a better and better sense of the structural features of experience. On the other side, we get a better and better account of the biology of subjectivity, and we work towards a mapping of the two. Integration might have some importance; IIT is not the only theory that makes use of it. The theories of Bernard Baars and Stanislas Dehaene are others. If integration (of the senses, of the senses with memory, of bodily feedback with everything else…) is indeed important, then information-theoretic measures might be useful in describing how this works. That’s very different from informational integration being the whole story, the bridge between mind and matter.

To close, here’s some video of octopus plus camera. The octopus tries to pick the camera up, probably to carry it home.

____________________

Notes:

I am a philosopher. People, including philosophers, sometimes say that a major task of philosophy is “methodological critique” of scientific work. That is not what I think. There is some methodological commentary in this post, but to me this is not essentially philosophical. I am assessing how Tononi and Koch’s ideas relate to the evidence they and others have given us. These issues connect to philosophical questions about the value of simplicity in theories (hence the nod to Karl Popper) but I reject the image of the philosopher as a sort of uninvited management consultant to science.

This recent New York Times article is quite good on the relations between brains and artificial systems. Tononi and Koch have published another major defense of their view here.

On the octopus front, this celebratory video has some excerpts from our Jervis Bay footage. In photo 3 above the diver is another collaborator, Matt Lawrence. The vertebrate keeping an eye on him and the octopus is a Banjo Ray (Trygonorrhina).